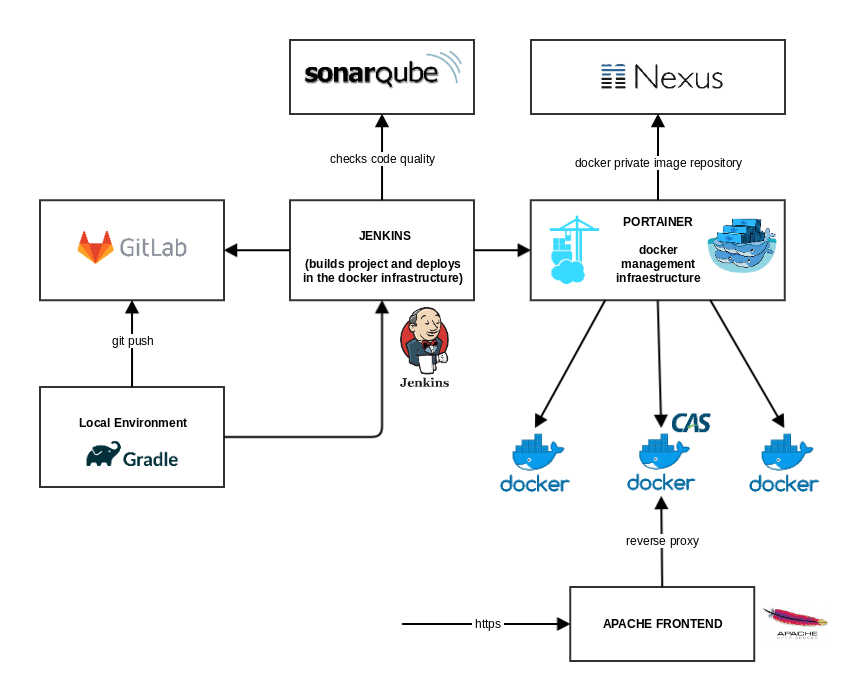

DevOps lifecycle of a production service with Docker-based infrastructure

The main idea of the following example is to show the DevOps lifecycle of an infrastructure application in a production environment using Docker containers. As a use case to describe this lifecycle, we take a customized Apereo CAS 6 server (Central Authentication Service). The open source tools used are the following:

- CAS 6 is the application to deploy. We configure the CAS server with OpenLDAP as the authentication handler, plus some minor customizations to the main forms of the application. The project uses Gradle, the open source build automation system of the CAS 6 overlay project, to generate the war file for CAS. A custom Dockerfile and a docker-compose file are used to deploy the packaged war file.

- Docker and Docker Compose are the container technologies behind Portainer.

- Portainer is a web management solution for a Docker Swarm cluster, where different Docker images run and applications are deployed.

- GitLab is used as the version control system, where our whole infrastructure project is stored as code. We can clone the project locally, customize it, commit the changes and push them back to the master branch.

- Jenkins is used for deployment. A defined job checks out the Git code and generates the release (the war file) to be deployed in a custom Docker image. This Docker image is built and then pushed to the Nexus 3 repository.

- Nexus 3 is used as a centralized repository for private Docker images, and also works as a proxy for public Docker Hub ones.

- SonarQube is a continuous code quality tool, used in the release generation process.

- Finally, we use an Apache frontend as a reverse proxy to publish our secured application on the Internet.

The lifecycle is represented in the following figure:

Let’s look at some details, tool by tool:

GitLab:

The starting point was the CAS 6 overlay project, which we used to create a customized CAS project in GitLab.

    $ git clone https://gitlab.zylk.net/dockyard/cas-6-ldap.git
    $ cd cas-6-ldap
    # We use gradle for several customizations
    $ ./build.sh getview footer
    $ ./build.sh getview header
    $ ./build.sh getview casLoginView
    $ ./build.sh getresource messages
    $ ./build.sh getresource messages_es
    $ ./build.sh getview loginform
    # (do the customization)
    $ git add .
    $ git commit . -m "Adding changes to custom CAS 6"
    $ git push -u origin master

A partial tree view of the project looks like this:

    ├── docker-compose.yml
    ├── Dockerfile
    ├── etc
    │   └── cas
    │       ├── config
    │       │   ├── cas.properties
    │       │   └── log4j2.xml
    │       ├── services
    │       └── thekeystore
    └── src
        └── main
            ├── jib
            │   └── docker
            │       └── entrypoint.sh
            └── resources
                ├── cas-theme-default.properties
                ├── messages_es.properties
                ├── messages.properties
                ├── static
                │   ├── css
                │   │   └── cas.css
                │   └── js
                │       └── cas.js
                └── templates
                    ├── casLoginView.html
                    └── fragments
                        ├── footer.html
                        ├── header.html
                        └── loginform.html

Additionally, we added our custom Docker resources to the project.

Dockerfile: it uses a lightweight JDK image to build the war file, and a different Tomcat image to deploy it.

    FROM openjdk:11-alpine as build
    ADD . /cas
    WORKDIR /cas
    USER root
    RUN set -x \
        && sh ./build.sh copy \
        && sh ./build.sh package

    FROM tomcat:9-jre11-slim
    COPY --from=build /cas/build/libs/cas.war /usr/local/tomcat/webapps

docker-compose.yml: it sets the Docker image to use, the published port(s), volumes and extra hosts. It refers to the image built from the previous Dockerfile, and it is used in the Portainer infrastructure to manage the deployment.

    version: '3.4'
    services:
      cas6:
        image: zylk/cas:6
        ports:
          - 9009:8009
        extra_hosts:
          - "ldap.zylk.net:192.168.1.222"

In the compose file, 9009 is the published AJP port (mapped to 8009 inside the container), which is proxied by the Apache frontend. We also need to define the LDAP server that CAS uses as its user directory, which is what the extra_hosts entry does.
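On the Apache side, the AJP proxying described above could look roughly like the fragment generated below. This is only a sketch under stated assumptions, not the configuration used in this setup: the host name `docker-host.example` is a placeholder, `mod_proxy` and `mod_proxy_ajp` are assumed to be enabled, the Tomcat AJP connector is assumed to be listening on 8009 inside the container, and the application is assumed to be served under `/cas` (Tomcat derives the context from `cas.war`). The helper name `write_cas_proxy_conf` is hypothetical.

```shell
#!/usr/bin/env bash
set -euo pipefail

# Write an Apache reverse-proxy fragment for CAS over AJP.
# $1: output file, $2: Docker host (placeholder), $3: published AJP port.
write_cas_proxy_conf() {
  local out_file="$1" docker_host="$2" ajp_port="$3"
  cat > "$out_file" <<EOF
# Requires mod_proxy and mod_proxy_ajp.
ProxyPass /cas ajp://${docker_host}:${ajp_port}/cas
EOF
}

# Example: 9009 is the port published in docker-compose.yml.
conf_file="$(mktemp)"
write_cas_proxy_conf "$conf_file" "docker-host.example" 9009
cat "$conf_file"
```

Because the path is the same on the proxy and on the backend (`/cas` in both cases), a `ProxyPassReverse` line is usually not needed with AJP. The generated fragment would then be included in the virtual host that serves the public HTTPS site.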

Jenkins:

    # first it clones the repo
    git clone https://gitlab.zylk.net/dockyard/cas-6-ldap.git
    # then it deploys to the Docker infrastructure
    cd /home/zylk/stacks/cas6
    docker stack rm cas6
    # the Docker image is built from our private repository
    docker build -t zylk/cas:6 .
    docker tag zylk/cas:6 nexus3.zylk.net/zylk/cas:6
    # once it is built, we push the image to Nexus
    docker push nexus3.zylk.net/zylk/cas:6
    # finally we deploy in Portainer
    docker stack deploy -c docker-compose.yml cas6
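The Jenkins steps above can also be wrapped in a small script that stops at the first failing command. The following is a minimal sketch, not the actual job definition: the `run` helper, the `deploy_cas` function and the `DRY_RUN` switch are hypothetical additions, and the build is moved before `docker stack rm` (a deliberate change from the order above) so that a failed build does not leave the stack removed. It is meant to run from the directory that contains the Dockerfile and docker-compose.yml.

```shell
#!/usr/bin/env bash
set -euo pipefail

# Values taken from the steps above; adjust them to your environment.
REGISTRY="nexus3.zylk.net"
IMAGE="zylk/cas:6"
STACK="cas6"

# DRY_RUN=1 (the default in this sketch) only prints the commands;
# set DRY_RUN=0 to really execute them.
DRY_RUN="${DRY_RUN:-1}"

# Print the command in dry-run mode, execute it otherwise.
run() {
  if [ "$DRY_RUN" = "1" ]; then
    printf '+ %s\n' "$*"
  else
    "$@"
  fi
}

deploy_cas() {
  # Build, tag and push first, so a failed build leaves the stack untouched.
  run docker build -t "$IMAGE" .
  run docker tag "$IMAGE" "$REGISTRY/$IMAGE"
  run docker push "$REGISTRY/$IMAGE"
  # Replace the running stack with the freshly built image.
  run docker stack rm "$STACK"
  run docker stack deploy -c docker-compose.yml "$STACK"
}

deploy_cas
```

Running the script as is prints the five Docker commands without touching the cluster, which makes it easy to review the job before enabling it with `DRY_RUN=0`.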

Portainer: Docker Swarm is the engine where the custom Docker containers and images run, while Portainer is the interface for managing the deployments. Finally, we publish the secured /cas context in the Apache frontend, using the ports exposed on the Portainer machine.
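Once the stack is deployed and the context is published, a simple check is to poll the CAS login page until it answers. The function below is a sketch of that idea; the name `wait_for_url`, its defaults and the example URL are assumptions for illustration, and it relies only on `curl`.

```shell
#!/usr/bin/env bash
set -euo pipefail

# Poll a URL until it answers successfully or the attempts run out.
# $1: URL, $2: max attempts (default 30), $3: seconds between attempts (default 5).
wait_for_url() {
  local url="$1" attempts="${2:-30}" delay="${3:-5}" i
  for ((i = 1; i <= attempts; i++)); do
    # -f: fail on HTTP errors, -sS: quiet but show errors, -o: discard the body.
    if curl -fsS -o /dev/null --max-time 10 "$url"; then
      echo "up after $i attempt(s): $url"
      return 0
    fi
    sleep "$delay"
  done
  echo "not reachable after $attempts attempt(s): $url" >&2
  return 1
}

# Hypothetical public URL of the Apache frontend:
# wait_for_url "https://sso.example.org/cas/login" 30 5
```

The function returns a non-zero status when the page never answers, so a Jenkins shell step that calls it as its last command would fail the build in that case.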

Links: